Share Europe Network Association Finishes Landmark Aerial-Photogrammetry Training in Austria

- Share Europe Network Association

- Sep 1, 2024

Updated: May 21, 2025

August 2024, Austria — Certified drone pilots from the Share Europe Network Association (SENA) spent a week in the Austrian countryside honing advanced aerial-photogrammetry skills, adding a fresh chapter to the organization’s growing portfolio of heritage-documentation projects and educational resources.

From Classroom to Cloud

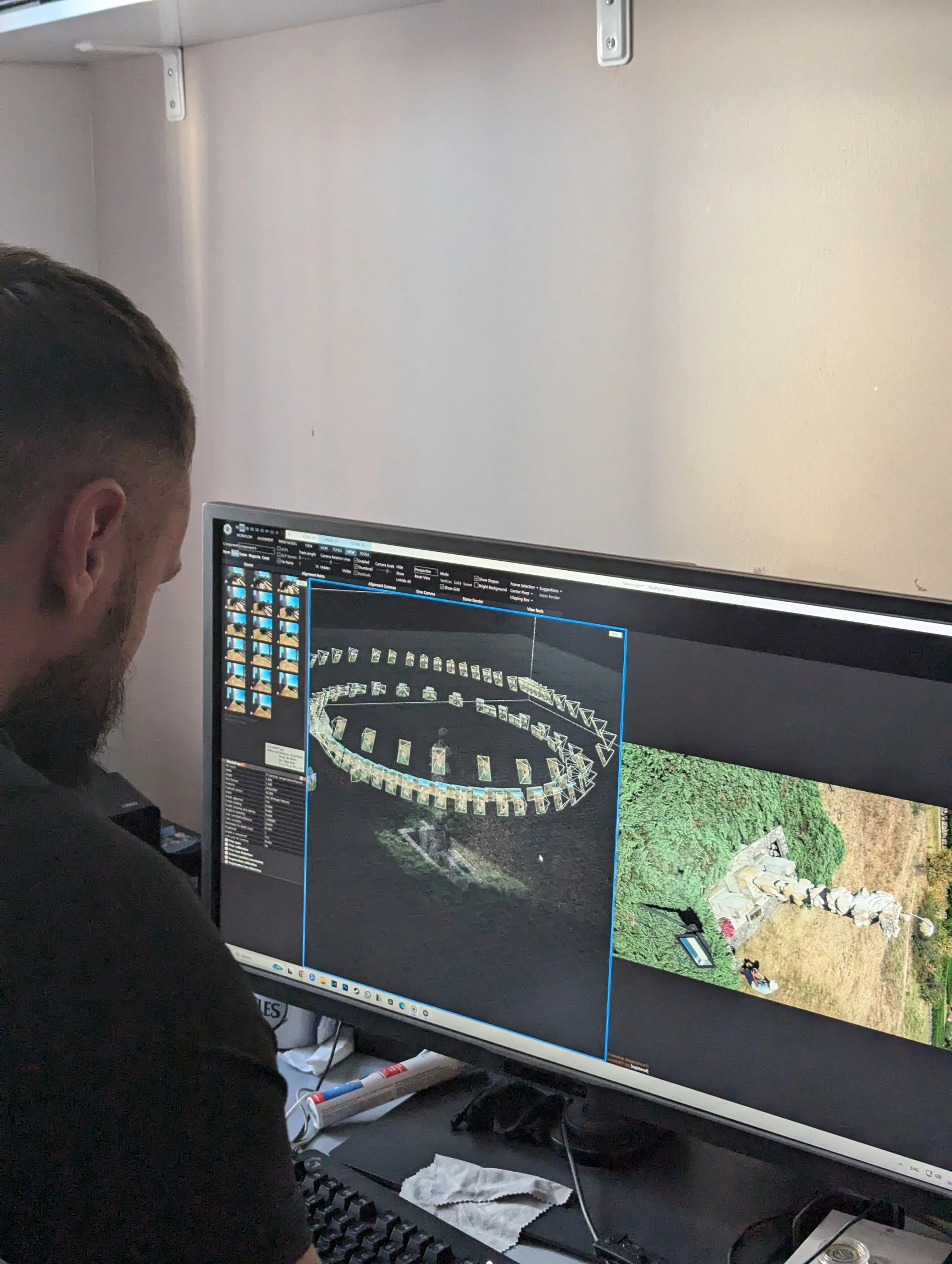

Hosted by Austrian aerial-mapping specialists, the training blended morning seminars on flight-path planning, camera-sensor calibration, and 3-D-reconstruction theory with afternoon field sessions. Participants rotated through flight crews, each responsible for pre-flight checklists, mission execution, data off-load, and ground-control logging.

Flying Labs Over Historic Landmarks

Two signature sites became living laboratories:

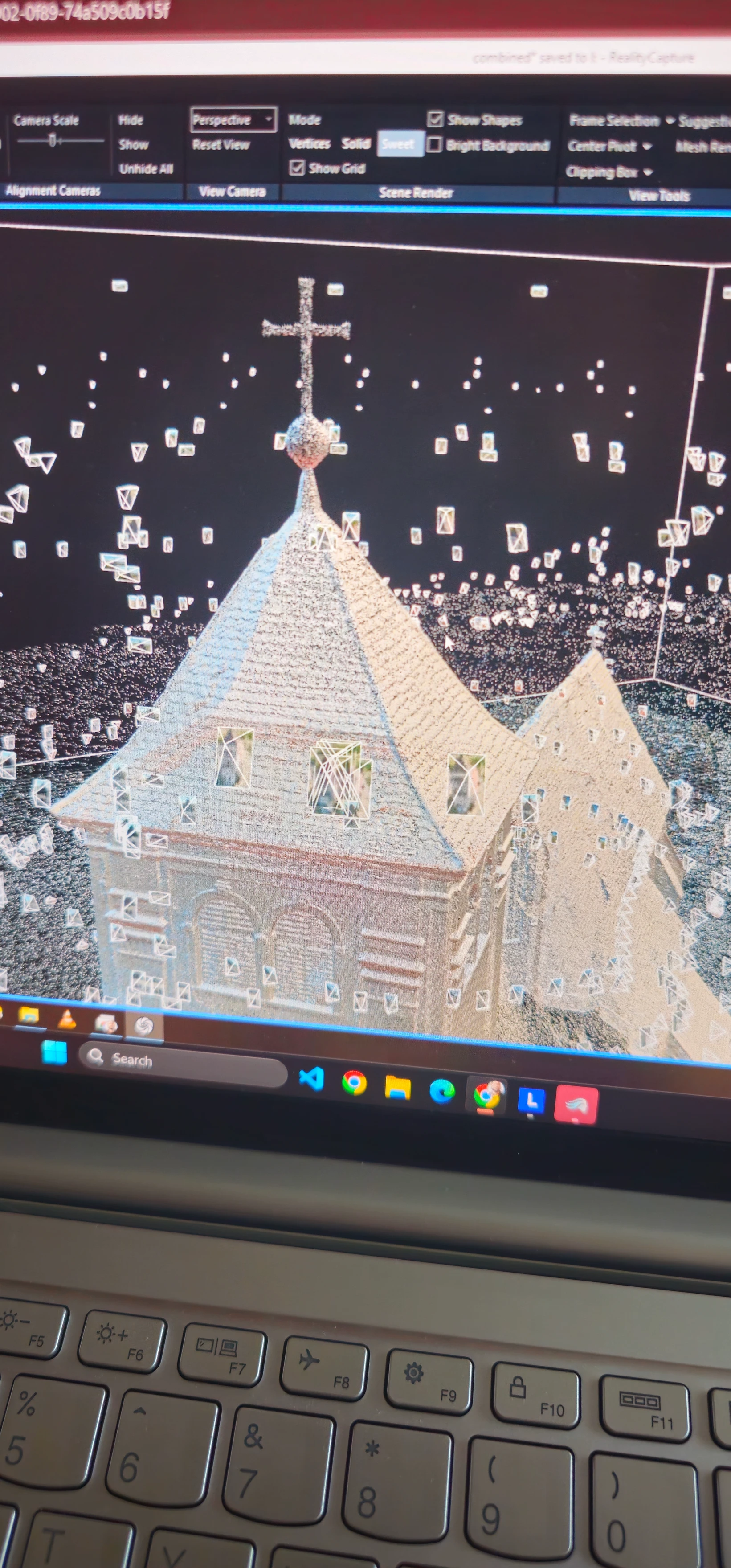

Katholische Kirche Wartberg (St. Leonard) — Pilots executed concentric “double-grid” and “corkscrew” orbits to capture the baroque church’s steeple and flying buttresses from every angle, generating a dense point cloud of more than 45 million points.

Grünes Lusthaus — The team experimented with low-altitude “ladder” passes to preserve roof-tile textures and the surrounding tree canopy, critical for shadow-free orthomosaics.

Beyond these headline locations, crews mapped smaller artifacts—stone bridges, vineyard terraces, and even an 18th-century sundial—demonstrating the versatility of photogrammetry at multiple scales.
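To make the "corkscrew" orbit more concrete, it can be pictured as a helix: the drone circles the structure at a fixed radius while climbing steadily, with the camera always facing the centre. The sketch below is only an illustration of that geometry, not the flight plan SENA actually flew. The function name, the local metre-based coordinates, and every numeric value are assumptions made for the example.

```python
import math

def corkscrew_waypoints(center_x, center_y, radius_m,
                        start_alt_m, end_alt_m, turns, points_per_turn):
    """Return (x, y, altitude, yaw_deg) waypoints on a helix around a point.

    Hypothetical helper: coordinates are local metres, and yaw is the
    mathematical angle (counter-clockwise from the +x axis), not a
    compass heading.
    """
    total = turns * points_per_turn
    waypoints = []
    for i in range(total + 1):
        angle = 2 * math.pi * i / points_per_turn
        climb_fraction = i / total
        x = center_x + radius_m * math.cos(angle)
        y = center_y + radius_m * math.sin(angle)
        altitude = start_alt_m + climb_fraction * (end_alt_m - start_alt_m)
        # Aim the camera back at the centre of the orbit.
        yaw = math.degrees(math.atan2(center_y - y, center_x - x)) % 360
        waypoints.append((x, y, altitude, yaw))
    return waypoints

# Illustrative values only: 3 turns at a 30 m radius, climbing from 20 m to 50 m.
plan = corkscrew_waypoints(0.0, 0.0, 30.0, 20.0, 50.0, turns=3, points_per_turn=12)
```

More points per turn means more overlap between neighbouring photos, at the cost of a longer flight.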

Gear That Made It Possible

| Gear | Role in Workflow |
| --- | --- |
| DJI Air / DJI Mavic | High-overlap aerial mapping & oblique capture |
| Full-frame DSLRs | Close-up façade plates; precision detail patches |
| Insta360 action cams | 360° passes to define the object’s full bounding box and fill occluded areas |
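"High-overlap" mapping comes down to simple arithmetic: flight altitude and camera geometry set the ground footprint of each photo, and the chosen overlap sets how far apart photos and flight lines can be. The sketch below uses the standard ground-sample-distance relation. The camera figures, altitude, and overlap percentages are hypothetical and are not taken from the training.

```python
def ground_sample_distance_m(sensor_width_mm, focal_length_mm,
                             image_width_px, altitude_m):
    """Ground size of one pixel, in metres, for a camera pointing straight down."""
    return (sensor_width_mm * altitude_m) / (focal_length_mm * image_width_px)

def spacing_m(footprint_m, overlap):
    """Distance between photos (or flight lines) for a given overlap fraction."""
    if not 0.0 <= overlap < 1.0:
        raise ValueError("overlap must be in [0, 1)")
    return footprint_m * (1.0 - overlap)

# Hypothetical camera and mission values, for illustration only.
SENSOR_WIDTH_MM = 13.2
FOCAL_LENGTH_MM = 8.8
IMAGE_WIDTH_PX = 5472
ALTITUDE_M = 60.0

gsd = ground_sample_distance_m(SENSOR_WIDTH_MM, FOCAL_LENGTH_MM,
                               IMAGE_WIDTH_PX, ALTITUDE_M)
footprint_width = gsd * IMAGE_WIDTH_PX          # ground width of one photo
line_spacing = spacing_m(footprint_width, 0.7)  # assumed 70 % side overlap

print(f"GSD: {gsd * 100:.2f} cm/px, footprint: {footprint_width:.1f} m, "
      f"line spacing: {line_spacing:.1f} m")
```

Flying lower shrinks the pixel footprint and sharpens detail, but it also shrinks the photo footprint, so the same overlap needs more flight lines.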

Pixels to Polygons: The Software Pipeline

Step | Tool(s) | Key Settings / Notes |

Frame extraction | VLC Player | Batch snapshot every few frames |

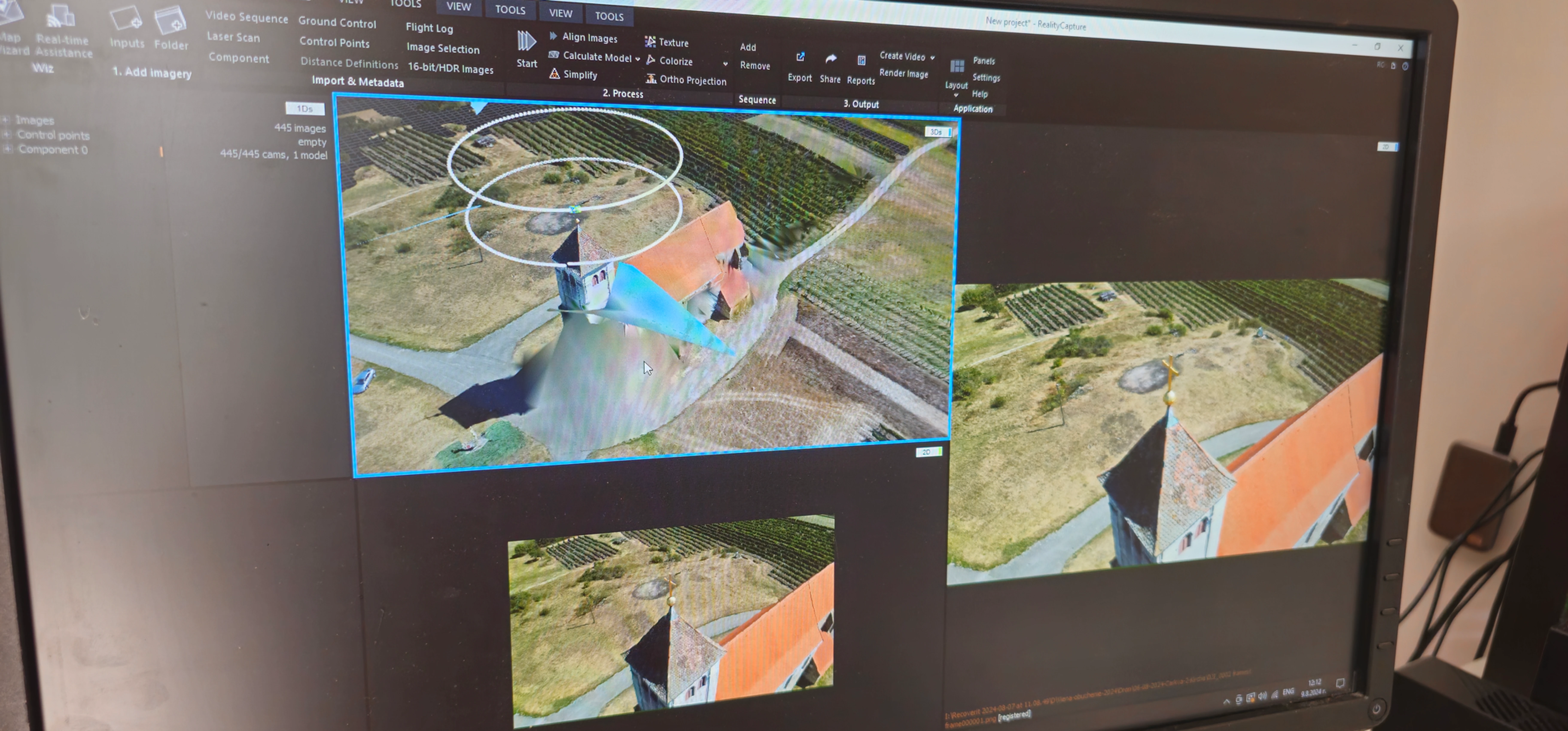

Alignment & sparse cloud | RealityCapture | Pair-selector at 75 % overlap; geotag-aware alignment |

Dense reconstruction | RealityCapture | No-filter mode for fine stonework; depth maps at “high” |

Texture baking | RealityCapture → Photoshop | 8 K PBR maps; dust-spot clone stamping; global colour balance |

Model QA & export | RealityCapture | Watertight check, normal-flip audit, GLB export for AR/VR |
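The frame-extraction step keeps only every Nth video frame so that consecutive stills differ enough to be useful for reconstruction. The sketch below shows just the sampling arithmetic and does not call VLC or any other tool. The function name, stride, and clip length are assumptions chosen for illustration.

```python
def sampled_frames(total_frames, fps, stride):
    """Return (frame_index, timestamp_seconds) for every `stride`-th frame."""
    if stride < 1:
        raise ValueError("stride must be at least 1")
    if fps <= 0:
        raise ValueError("fps must be positive")
    return [(index, index / fps) for index in range(0, total_frames, stride)]

# Hypothetical clip: 10 seconds at 30 fps, keeping every 10th frame.
frames = sampled_frames(total_frames=300, fps=30.0, stride=10)
print(len(frames), "stills, one every", 10 / 30.0, "seconds")
```

A smaller stride gives more overlap between stills but also more near-duplicate images to align, so the right value depends on how fast the camera was moving.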

Unified, High-Fidelity 3-D Models

Every photograph, whether captured by drone or DSLR or extracted from Insta360 video footage, was imported into RealityCapture as an individual still image. The software performed automatic feature detection and used Structure-from-Motion (SfM) to solve for camera positions and orientations, aligning all images into a single coordinate system. Where on-site ground-control points (GCPs) were available, they were used to georeference the model to centimeter-level accuracy.

A dense point cloud was then generated to capture the detailed geometry of each site. Frames extracted from video footage added images from further angles, improving coverage and helping to reconstruct areas that were difficult to capture with still photography alone. From the point cloud, a triangular mesh was created, and textures were baked from the high-resolution still images, resulting in visually rich, photo-realistic models.
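One common way to check a georeferenced model against its GCPs is to compare each surveyed coordinate with the position of the same marker in the reconstruction and report the root-mean-square error. The sketch below assumes both sets are already in the same metric coordinate system. The coordinates are invented for illustration and are not measurements from the training.

```python
import math

def gcp_rmse(surveyed, reconstructed):
    """3-D root-mean-square error between matched GCP coordinates, in metres."""
    if not surveyed or len(surveyed) != len(reconstructed):
        raise ValueError("need two non-empty point lists of equal length")
    squared_sum = 0.0
    for (sx, sy, sz), (rx, ry, rz) in zip(surveyed, reconstructed):
        squared_sum += (sx - rx) ** 2 + (sy - ry) ** 2 + (sz - rz) ** 2
    return math.sqrt(squared_sum / len(surveyed))

# Hypothetical GCPs (metres): survey values versus positions in the model.
survey = [(0.0, 0.0, 0.0), (10.0, 0.0, 0.0)]
model = [(0.03, 0.0, 0.04), (10.0, 0.0, 0.0)]
print(f"GCP RMSE: {gcp_rmse(survey, model) * 100:.1f} cm")
```

An RMSE of a few centimetres would be consistent with the accuracy described above, while a much larger value usually points to a mislabelled marker or a weak alignment.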
